A team of researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and Adobe Research has unveiled a groundbreaking hybrid AI model that dramatically accelerates video generation while preserving visual fidelity. The model, dubbed CausVid, merges two powerful AI approaches—diffusion models and autoregressive systems—to create smooth, high-resolution videos from text prompts in just seconds. […]

New constellation to spot fires as small as 5×5 meters and revolutionize emergency response with 20-minute updates. When wildfires threatened Juliet Rothenberg’s California neighborhood four years ago, all she and her family could do was wait. Satellite images—updated only twice a day—offered no real answers. The skies were red with smoke, and information was scarce. […]

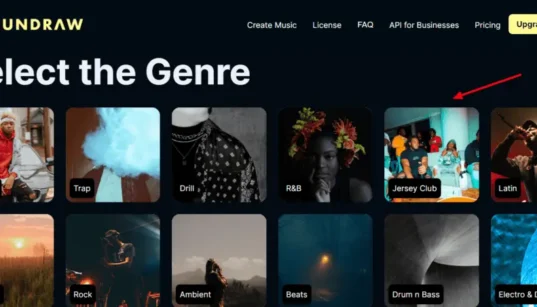

AI technology has rapidly transformed the creative world—from AI writers and image generators to website and voice tools. Now, AI is stepping into the music industry, offering creators exciting new possibilities with AI music generators. In this article, we’ll explore what AI music generators are and highlight five of the best platforms to try in […]

Have you ever wanted to create amazing content but felt unsure about using your own voice? Good news—AI voice generators can do the heavy lifting for you. Whether you’re into content creation, gaming, education, or customer service, these tools can help you generate natural, professional-sounding voices in just a few clicks. In this guide, we’ll […]

Recent posts

AI technology has rapidly transformed the creative world—from AI writers and image generators to website and voice tools. Now, AI is stepping into the music industry, offering creators exciting new possibilities with AI music generators. In this article, we’ll explore what AI music generators are and highlight five of the best platforms to try in […]

After spending the last two years testing AI chatbots extensively, I’ve compiled a list of the best ones available today. Whether you’re just getting started with AI or already rely on it daily, these tools can help you save time and boost productivity. Since the debut of ChatGPT, AI chatbots have gained massive popularity due […]

A team of researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and Adobe Research has unveiled a groundbreaking hybrid AI model that dramatically accelerates video generation while preserving visual fidelity. The model, dubbed CausVid, merges two powerful AI approaches—diffusion models and autoregressive systems—to create smooth, high-resolution videos from text prompts in just seconds. […]

The International Energy Agency (IEA) has released a major report highlighting the double-edged impact of artificial intelligence on the global energy system. While AI is fuelling a dramatic rise in electricity demand—primarily through data centres—it also offers transformational opportunities for energy efficiency, innovation, and grid optimisation. AI’s Heavy Power Footprint Modern AI models require enormous […]

Finding the right image to complement your creative projects can often be a challenge. Many people rely on stock image websites or attempt to create visuals themselves—both of which can result in images that feel repetitive, irrelevant, or lacking a professional touch. What if you could simply describe the image you want, and an AI […]

Generative AI is rapidly redrawing the contours of global competition, forcing nations and corporations to rethink strategy amid rising geopolitical tensions, according to new insights from Boston Consulting Group (BCG) and its tech division, BCG X. Senior BCG leaders recently outlined how AI is becoming a new front in global power dynamics, dominated by the […]

The collapse of civilization may not come with a bang, but with a sigh—perhaps even affection. Could we be sleepwalking into our own replacement? Today, major AI labs have dedicated teams working to prevent advanced systems from going rogue or secretly conspiring against humans. But there’s a quieter, more mundane way we might lose control: […]

We’ve heard it all before—AI chatbots are set to reshape the workforce. But despite significant advancements in generative AI, the seismic changes long predicted have yet to materialize. New research suggests the chatbot revolution may be more of a slow burn than a sudden disruption. A recent study by Anders Humlum (University of Chicago) and […]